IBM® announced a series of new Watson Internet of Things (IoT) offerings, capabilities, and ecosystem partners that are designed to extend the power of cognitive computing to the billions of connected devices, sensors, and systems that make up the Internet of Things.

As an engineer or a developer, you might be wondering what you can do with the cognitive capabilities that are now available for the IBM Watson IoT Platform. Read on to learn about the Watson APIs for IoT.

Watson APIs for IoT

The Watson APIs for IoT help accelerate the development of cognitive IoT solutions and services on the IBM Watson IoT Platform. By using these APIs, you will be able to build cognitive applications that:

- Interact with humans naturally by using both text and voice

- Understand images and recognize scenes

- Learn from sensory inputs to find meaningful patterns

- Correlate data with external data sources, such as weather or Twitter

These APIs enable cognitive capabilities in these key areas:

Natural language processing (NLP)

Natural language processing enables users to interact with systems and devices by using simple, human language. NLP helps solutions understand the intent of human language by correlating text with other sources of data to put it into context in specific situations. For example, a technician who is working on a machine notices an unusual vibration and asks the system, “What is causing that vibration?” Using NLP and other sensor data, the system links the words to meaning and intent, determines which machine the technician is referring to, and correlates that information with recent maintenance records to identify the most likely source of the vibration. It then recommends an action to reduce the vibration.
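The following minimal, self-contained Python sketch makes the vibration example concrete. It does not call a Watson API. A hypothetical keyword table stands in for natural language understanding. The technician’s location (an assumed piece of context, such as a tablet’s position) stands in for the sensor data that identifies the machine. The newest maintenance record for that machine is returned as the first lead. All names are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple

@dataclass
class MaintenanceRecord:
    machine_id: str
    day: date
    note: str

# Hypothetical intent vocabulary; a trained NLP service would learn this mapping.
INTENT_KEYWORDS: Dict[str, set] = {
    "diagnose_vibration": {"vibration", "vibrating", "shaking"},
    "diagnose_noise": {"noise", "noisy", "squeal"},
}

def detect_intent(question: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the question."""
    words = {w.strip("?.,!").lower() for w in question.split()}
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None

def answer(
    question: str,
    technician_location: str,
    machine_at_location: Dict[str, str],
    records: List[MaintenanceRecord],
) -> Optional[Tuple[str, str, Optional[str]]]:
    """Resolve intent and machine, then return the most recent maintenance note."""
    intent = detect_intent(question)
    # "that vibration" names no machine; context resolves the reference.
    machine_id = machine_at_location.get(technician_location)
    if intent is None or machine_id is None:
        return None
    history = sorted(
        (r for r in records if r.machine_id == machine_id),
        key=lambda r: r.day,
        reverse=True,
    )
    latest_note = history[0].note if history else None
    return intent, machine_id, latest_note
```

A real system would rank several candidate causes rather than return a single note. The sketch only shows the order of operations: intent first, then the referenced machine, then the correlated records.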

Machine learning

Machine learning automates data processing and continuously monitors new data and user interactions to rank data and results based on learned priorities. It can be applied to any data that comes from devices and sensors to automatically understand current conditions, what’s normal, expected trends, which properties to monitor, and which actions to take when an issue arises. For example, the IBM Watson IoT Platform can monitor incoming data from a fleet of equipment and learn both normal and abnormal conditions. These conditions are often unique to each piece of equipment and how it is used, including its environment and production processes. Machine learning captures those differences and configures the system to monitor the unique conditions of each asset.
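One simple way to picture “learning what’s normal” for each asset is a running baseline per machine. The Python sketch below is an assumption, not the platform’s algorithm. It keeps a separate mean and standard deviation for each asset using Welford’s online method. It then flags any reading that lies more than a chosen number of standard deviations from that asset’s own baseline. The `threshold` and `warmup` values are illustrative.

```python
import math
from collections import defaultdict
from typing import DefaultDict

class RunningStats:
    """Welford's online algorithm: mean and variance without storing history."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

class PerAssetMonitor:
    """Learns a separate baseline for every asset and flags outliers against it."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold  # standard deviations that count as abnormal
        self.warmup = warmup        # readings to observe before flagging anything
        self.stats: DefaultDict[str, RunningStats] = defaultdict(RunningStats)

    def observe(self, asset_id: str, value: float) -> bool:
        s = self.stats[asset_id]
        abnormal = False
        if s.n >= self.warmup and s.std > 0:
            abnormal = abs(value - s.mean) / s.std > self.threshold
        if not abnormal:
            s.update(value)  # only fold readings judged normal into the baseline
        return abnormal
```

Each asset has its own statistics. As a result, a reading of 50.0 is flagged for a pump that normally runs near 10.0 but not for a pump that normally runs near 50.0. This mirrors the point that normal conditions differ from asset to asset.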

Video and image analytics

Video and image analytics enable users to monitor unstructured data from video feeds and image snapshots to identify scenes and patterns. Apps can combine this data with machine data to gain a greater understanding of past events and emerging situations. For example, video analytics on security cameras determine that a forklift has entered a restricted area, which creates a minor alert in the system. Three days later, an asset in the restricted area begins to show decreased performance. The two incidents can now be correlated to identify a collision between the forklift and the asset. That connection was not readily apparent from the video or the machine data alone.
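The forklift example comes down to joining two event streams on asset and time. The following minimal Python sketch makes two hypothetical assumptions. First, video analytics tags each alert with the ID of the asset in the monitored area. Second, a seven-day window is a reasonable look-ahead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

@dataclass(frozen=True)
class Event:
    source: str      # "video" or "machine"
    asset_id: str    # asset in the camera's restricted area, or the machine itself
    kind: str
    at: datetime

def correlate(
    events: Iterable[Event], window: timedelta = timedelta(days=7)
) -> List[Tuple[Event, Event]]:
    """Pair each video alert with later machine events on the same asset within `window`."""
    events = list(events)
    video = [e for e in events if e.source == "video"]
    machine = [e for e in events if e.source == "machine"]
    return [
        (v, m)
        for v in video
        for m in machine
        if v.asset_id == m.asset_id and timedelta(0) <= m.at - v.at <= window
    ]
```

The nested comprehension is quadratic in the number of events. For large volumes you would index machine events by asset and time instead.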

Text analytics

Text analytics enables the mining of unstructured textual data, including transcripts of customer calls to a call center, technician maintenance logs, blog comments, and tweets, to find correlations and patterns in the large volumes of data from these sources. For example, phrases such as “my brakes make a noise,” “my car seems slow to stop,” and “the pedal feels mushy” that are reported through unstructured channels can be linked and correlated to identify potential brake issues in a particular make and model of car.
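A deliberately simple Python sketch can show how phrases like these get linked. A hypothetical phrase lexicon stands in for real text analytics, which would also handle synonyms, misspellings, and negation. Each report is tagged with issues, and the tags are counted per make and model so that a cluster stands out. The lexicon, the `min_reports` cut-off, and the vehicle names are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical symptom lexicon: issue -> phrases that suggest it.
ISSUE_PHRASES: Dict[str, Tuple[str, ...]] = {
    "brakes": ("brake", "slow to stop", "pedal feels mushy"),
    "steering": ("pulls to the left", "pulls to the right", "steering"),
}

def tag_issues(text: str) -> Set[str]:
    """Return every issue whose phrases occur in the report text."""
    lowered = text.lower()
    return {issue for issue, phrases in ISSUE_PHRASES.items()
            if any(p in lowered for p in phrases)}

def flag_clusters(reports: Iterable[Tuple[str, str]],
                  min_reports: int = 3) -> List[Tuple[str, str, int]]:
    """Count tagged issues per make/model; keep those with at least min_reports."""
    counts: Counter = Counter()
    for make_model, text in reports:
        for issue in tag_issues(text):
            counts[(make_model, issue)] += 1
    return sorted((mm, issue, n) for (mm, issue), n in counts.items() if n >= min_reports)
```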

A Real Customer Example

A global electronics OEM recently connected its device to the IBM Watson IoT Platform. By using the new Watson APIs for the IBM Watson IoT Platform, the company demonstrated how easy it is to build an open voice interface into its product. That interface can be trained on domain-specific content and learns over time.

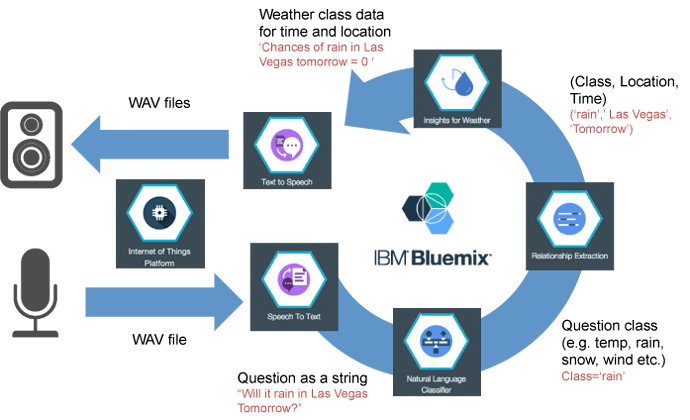

See Figure 1.

In this example, the system was able to understand a spoken question such as “Will the storm hit our beaches in North Carolina this weekend?” and then provide a response based on data from The Weather Company. Such a consumer application might seem trivial if you have a modern smartphone in your pocket. Unlike today’s closed commercial systems, however, this application can be trained to answer domain-specific questions: “What is the risk of weather-related delays at our shipping center in Tulsa?”; “Are there any storms on the route of container ship 123?”; or “What is the weather today near oil rig 42?”
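Training the system on domain-specific questions starts with labeled examples. As we understand its documented format, the Natural Language Classifier used later in this walkthrough was trained from a CSV file in which each row holds an example text followed by its class. The Python sketch below writes the questions above in that layout; verify the format against the service documentation before relying on it. The class labels are hypothetical, and a real training set would need many more examples per class.

```python
import csv
import io
from typing import Iterable, List, Tuple

# Hypothetical class labels for the domain-specific questions from the example.
TRAINING_EXAMPLES: List[Tuple[str, str]] = [
    ("Will the storm hit our beaches in North Carolina this weekend?", "storm"),
    ("Are there any storms on the route of container ship 123?", "storm"),
    ("What is the risk of weather-related delays at our shipping center in Tulsa?", "delay_risk"),
    ("What is the weather today near oil rig 42?", "current_conditions"),
]

def to_training_csv(examples: Iterable[Tuple[str, str]]) -> str:
    """Write (text, class) pairs as CSV rows with no header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # quotes any field that contains commas or quotes
    for text, label in examples:
        writer.writerow([text, label])
    return buffer.getvalue()
```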

These six steps will give you a sense of how the system works:

Step 1.

When a user asks a question, the recording of the question (captured in a .wav file) is transferred by the IBM Watson IoT Platform Connect service to a Bluemix app in the cloud for further analysis. The Bluemix app then uses cognitive APIs to parse the question, understand its intent, and formulate a response. (Each of these APIs is described in the following steps.)

IBM Watson IoT Platform Connect securely connects your devices to your apps running in the cloud through REST and real-time APIs based on the MQTT and HTTP protocols.
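For a device, the MQTT side of Connect comes down to a handful of connection parameters. The Python sketch below assembles them following the naming conventions the Watson IoT Platform documented for devices:

- a `<org>.messaging.internetofthings.ibmcloud.com` host
- a `d:<org>:<type>:<id>` client ID
- token authentication with the user name `use-token-auth`
- event topics of the form `iot-2/evt/<event>/fmt/<format>`

Treat these conventions as assumptions to verify against current IBM documentation. The organization, device names, event name, and JSON payload fields are hypothetical. The payload carries the .wav bytes base64-encoded so that they fit in a JSON event. Publishing requires an MQTT client library such as paho-mqtt, which is not shown.

```python
import base64
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class DeviceConnection:
    host: str
    port: int
    client_id: str
    username: str
    password: str
    topic: str

def device_connection(org_id: str, device_type: str, device_id: str,
                      auth_token: str, event_id: str = "question") -> DeviceConnection:
    """Build MQTT connection settings using the platform's (assumed) naming scheme."""
    return DeviceConnection(
        host=f"{org_id}.messaging.internetofthings.ibmcloud.com",
        port=8883,                      # MQTT over TLS
        client_id=f"d:{org_id}:{device_type}:{device_id}",
        username="use-token-auth",      # token-based device authentication
        password=auth_token,
        topic=f"iot-2/evt/{event_id}/fmt/json",
    )

def question_event(wav_bytes: bytes, sample_rate_hz: int) -> str:
    """Wrap the recorded question in a JSON event body (hypothetical field names)."""
    return json.dumps({
        "audio_wav_base64": base64.b64encode(wav_bytes).decode("ascii"),
        "sample_rate_hz": sample_rate_hz,
    })
```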

Step 2.

The audio in the .wav file is converted to text by using the Watson Speech to Text service. (The resulting text is further parsed and analyzed in the next steps.)

The Watson Speech to Text service uses self-learning machine intelligence to combine information about grammar and language structure with knowledge of the composition of the audio signal to generate an accurate transcription. The service supports several languages.
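From the Bluemix app, calling Speech to Text is an HTTP request. The Python sketch below uses only the standard library and does two things:

- It builds, but does not send, a POST to the service’s `/v1/recognize` endpoint, with the audio as the body and basic-auth credentials.
- It extracts the top transcript from a JSON response made of `results` and `alternatives`.

The service URL, credentials, and sample response are placeholders. The endpoint path and response shape reflect the service’s REST API as we understand it and should be checked against the current API reference.

```python
import base64
import json
import urllib.request

def recognize_request(service_url: str, username: str, password: str,
                      wav_bytes: bytes) -> urllib.request.Request:
    """Build a POST that sends WAV audio to the Speech to Text recognize endpoint."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{service_url.rstrip('/')}/v1/recognize",
        data=wav_bytes,
        method="POST",
        headers={"Content-Type": "audio/wav", "Authorization": f"Basic {token}"},
    )

def best_transcript(response_body: str) -> str:
    """Join the top-ranked alternative of each result into one transcript."""
    data = json.loads(response_body)
    pieces = []
    for result in data.get("results", []):
        alternatives = result.get("alternatives", [])
        if alternatives:
            pieces.append(alternatives[0]["transcript"].strip())
    return " ".join(pieces)
```

Passing the request to `urllib.request.urlopen` sends it. The response body can then go straight into `best_transcript`.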

Step 3.

When the app receives the transcribed question, it invokes the Natural Language Classifier service. The service determines whether the question is about the weather and, more specifically, whether it concerns a “rain forecast,” a “snow storm,” or just a simple “temperature” request. So, if the question is “Will the storm hit our beaches in North Carolina this weekend?”, the service tells us that the question is about a “storm.”

The Natural Language Classifier (NLC) service interprets the intent behind text and returns a corresponding classification with associated confidence levels. In other words, it allows applications to understand the context of the question.
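A classification is only useful if the app acts on the confidence that comes with it. This Python sketch assumes a response with a `classes` list of `class_name`/`confidence` entries. That is the shape we understand the classifier to return; verify it against the API reference. The sketch routes the question only when the top class clears a threshold. The 0.6 cut-off and the sample responses are illustrative.

```python
import json
from typing import Optional

CONFIDENCE_THRESHOLD = 0.6  # illustrative cut-off; tune it on your own questions

def route_intent(response_body: str, threshold: float = CONFIDENCE_THRESHOLD) -> Optional[str]:
    """Return the top class name, or None when the classifier is not confident enough."""
    data = json.loads(response_body)
    classes = sorted(data.get("classes", []), key=lambda c: c["confidence"], reverse=True)
    if not classes or classes[0]["confidence"] < threshold:
        return None  # better to ask the user to rephrase than answer the wrong question
    return classes[0]["class_name"]
```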

Step 4.

The app then uses the Relationship Extraction service to analyze the text further and understand the composition of the question: its logical entities and their relationships. So, if the question is “Will the storm hit our beaches in North Carolina this weekend?”, the Relationship Extraction service tells us that the question concerns the North Carolina coast. It also tells us that the time frame is Saturday to Sunday, three days from now (assuming the question is asked on a Wednesday).

The Relationship Extraction (RE) service uses machine learning and statistical modeling to perform linguistic analysis of the input text. It finds spans of text, clusters them into entities, and then extracts the relationships between those entities. In other words, it understands what the question is about.
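Once the entities are known, turning an extracted time expression into concrete dates is ordinary application logic. The Python sketch below assumes that the extraction step has produced a location and the phrase “this weekend”; the dictionary shape is hypothetical. The sketch resolves the phrase relative to the day the question was asked. For a question asked on a Wednesday, the weekend starts three days later, matching the example.

```python
from datetime import date, timedelta
from typing import Dict, Tuple

SATURDAY = 5  # date.weekday() numbers Monday as 0 and Sunday as 6

def resolve_this_weekend(asked_on: date) -> Tuple[date, date]:
    """Return (Saturday, Sunday) for the weekend that "this weekend" refers to."""
    weekday = asked_on.weekday()
    if weekday == 6:  # on a Sunday, "this weekend" is the one in progress
        return asked_on - timedelta(days=1), asked_on
    saturday = asked_on + timedelta(days=(SATURDAY - weekday) % 7)
    return saturday, saturday + timedelta(days=1)

def to_forecast_query(entities: Dict[str, str], asked_on: date) -> Dict[str, object]:
    """Map hypothetical extracted entities onto a forecast query."""
    if entities.get("time") != "this weekend":
        raise ValueError(f"unsupported time expression: {entities.get('time')!r}")
    start, end = resolve_this_weekend(asked_on)
    return {"location": entities["location"], "start": start, "end": end}
```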

Step 5.

The app can now invoke the appropriate weather API to get the forecast and formulate a response to the question.

The Insights for Weather API gives us access to historical and real-time weather data from The Weather Company, which can be integrated into our Bluemix app.
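Once the intent, location, and dates are known, formulating the answer is a filter over forecast days. The Python sketch below uses a hypothetical `DailyForecast` record rather than the actual Insights for Weather response fields; you would map those fields onto it. The wording of the reply is also illustrative. Day names come from a fixed list, so the output does not depend on the system locale.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

@dataclass
class DailyForecast:
    """Hypothetical per-day record; populate it from the weather API's response."""
    day: date
    narrative: str
    severe_weather: bool

def answer_storm_question(location: str, forecasts: List[DailyForecast],
                          start: date, end: date) -> str:
    """Answer a 'storm' question for the requested date range."""
    in_range = [f for f in forecasts if start <= f.day <= end]
    if not in_range:
        return f"I don't have a forecast for {location} for those dates."
    stormy = [f for f in in_range if f.severe_weather]
    if not stormy:
        first, last = DAY_NAMES[start.weekday()], DAY_NAMES[end.weekday()]
        return f"No storm is expected for {location} between {first} and {last}."
    days = " and ".join(DAY_NAMES[f.day.weekday()] for f in stormy)
    return f"Yes. Severe weather is forecast for {location} on {days}. {stormy[0].narrative}"
```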

Step 6.

Now that the app has the answer to the question, it can synthesize the text into an audio file that is streamed back to the speakers on the IoT device.

The Watson Text to Speech service provides a REST API that synthesizes speech audio from plain text input in several languages and voices.
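The final call mirrors the first: the app posts the answer text to Text to Speech and streams the returned audio to the device. The Python sketch below builds, but does not send, a request to the service’s `/v1/synthesize` endpoint. The request has a JSON body, an `Accept` header that selects WAV output, and a `voice` query parameter. The service URL, the credentials, and the default voice name are placeholders or assumptions; check them against the current voice list and API reference.

```python
import base64
import json
import urllib.parse
import urllib.request

def synthesize_request(service_url: str, username: str, password: str, text: str,
                       voice: str = "en-US_AllisonVoice") -> urllib.request.Request:
    """Build a POST asking Text to Speech to render `text` as WAV audio."""
    query = urllib.parse.urlencode({"voice": voice})  # voice name is an assumption
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{service_url.rstrip('/')}/v1/synthesize?{query}",
        data=json.dumps({"text": text}).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "audio/wav",  # requested output format
            "Authorization": f"Basic {token}",
        },
    )
```

Sending the request with `urllib.request.urlopen` returns the audio bytes. The app would then stream those bytes back to the device.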

How will you use these cognitive capabilities?

As IBM continues to accelerate the use of powerful cognitive capabilities, the next generation of IoT applications will be able to:

- Understand intents by interacting with users in natural language

- Identify reasons by applying probabilities and analytics

- Learn as users interact with the system and as real-time conditions change

Credits: IBM Developers